Last month Scentre Group’s National CCTV Camera Shootout was held at Westfield in Bondi Junction. This is the largest CCTV camera shootout of its type in Australia, with the diverse mall environment giving attendees plenty to think about across 4 camera groups.

We often rattle on about objective camera testing in SEN and we do this because there is absolutely nothing that highlights strengths and weaknesses of performance more clearly than lining up a group of cameras in generally identical circumstances and seeing which image looks best. The challenge is getting all the latest cameras in one place and, even more difficult, providing a series of real-world applications that allow adequate conditions for comparison.

Organised by Scentre Group, the shootout covered 4 categories: entry ID, common mall, micro dome and a new category, omnidirectional cameras, which were installed in the carpark in extremely challenging light conditions.

A particular difficulty for manufacturers participating in the Scentre Group CCTV Camera Shootout is that not only resolution but also bandwidth is prescribed. We’ve seen at SecTech that manufacturers not corralled by specification tend to let bitrate run high, sometimes hugely. Scentre Group is strict, and this tests the cameras in realistic ways.

According to Scentre Group’s Simon Brett Edwards, everything reasonably possible and more had been done to keep things impartial between vendors and eliminate any unconscious bias.

“Cameras are installed at Scentre Group sites for 3 reasons – first comes public liability,” Edwards explains. “We need to ensure claims are legitimate – if a camera can show a person had a shoelace untied, or there was a spillage on the floor, that's an important attribute.

“Secondly, we use our surveillance systems for security. Thirdly, we are finding more and more use for video surveillance surrounding customer experience. We use LPR for vehicles driving into our malls in case they are stolen or customers can’t find their car. If you lose a child or laptop, we can find them by following their movements using our CCTV system. We had 550 million unique visits to our stores in Australia last year – we consider CCTV critical to our operations.”

Scentre Group’s performance yardstick is bolted to operational fundamentals.

“We look at how well you can differentiate bright colours outside and inside the centre – can operators see the road surface?” Yigal Shirin explains. “We look across the road at the fence – how straight is the fence in terms of lens distortion? How much pixellation is in the images under digital zoom? How much noise can we see? Sharpness is important, and so is colour rendition. Something else we are concerned with is motion blur in areas of low light.”

According to Shirin, the parameters are universal for every camera in the test.

“For entry ID we stipulated resolution of 1080p, and a 2MB data stream,” he explains. “We provided a distance and target size, and we left it to the manufacturers to deliver the best camera with respect to WDR, backlight compensation and colour rendition.”
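To get a feel for how tight a capped stream is, the bitrate can be divided across frames and pixels. This is a rough sketch under stated assumptions: it reads the “2MB data stream” as a 2 Mbps cap and assumes 25 frames per second, neither of which the specification above confirms, and it spreads bits evenly across frames, whereas real encoders spend far more on keyframes than on the frames in between.

```python
def bitrate_budget(bitrate_bps: float, fps: float, width: int, height: int) -> tuple[float, float]:
    """Return (average bits per frame, average bits per pixel per frame).

    Assumes the bit budget is shared evenly across frames, which real
    encoders do not do (keyframes take a larger share).
    """
    bits_per_frame = bitrate_bps / fps
    bits_per_pixel = bits_per_frame / (width * height)
    return bits_per_frame, bits_per_pixel


# Assumed values: 2 Mbps cap, 1080p, 25 fps (frame rate not given in the spec)
bits, bpp = bitrate_budget(2_000_000, 25, 1920, 1080)
print(f"{bits / 8 / 1000:.0f} kB per frame, {bpp:.3f} bits per pixel")
```

At under 0.04 bits per pixel, the encoder is compressing the picture by a factor of roughly 300 compared with 8-bit 4:2:0 source video, so noisy or busy scenes tend to lose fine detail first. That’s why a bitrate cap tests a camera’s processing as much as its sensor.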


According to Scentre Group’s Simon Pollak, the company is broadly considering image quality, colour rendition and whether the image is fit for purpose.

“If a camera does not meet these criteria, that’s a fail,” Pollak says. “From there we look at each camera’s feature set and technical capabilities, and finally we enter into commercial negotiations with a manufacturer and select one camera from each category for all our stores for the following 12 months.”

“As for how the shootout will run today, we have 4 categories and we’ll look at each of the cameras by category. The category I’m really excited about this year is omnidirectional – fisheye or multi-head. It’s the first time we’ve really looked at cameras with this capability and we have 6 cameras in that category,” he says. After the shootout, Scentre Group offered contracts to the camera manufacturers whose products performed best.

We have SEN’s test target Norman doing a spot of shopping in the mall today and his presence will give us a good opportunity to look at colour rendition and differentiation, contrast, sharpness and licence plates. This is exciting stuff for CCTV propeller-heads. As we run through the camera groups, none of the visitors has a clue which camera is which. Given we test quite a few cameras at SEN, something I’m personally keen to discover is whether the shootout replicates our own observations of camera performance.

The Editor’s Opinion

The whole idea of the shootout is to allow attendees to rate the cameras being tested in real time, and here I’m going to give SEN’s opinion on which cameras we thought did best. Other people with different operational concerns, including Scentre Group, may have different opinions on which was the best camera in each of the categories under test. It’s also worth knowing that we viewed the test on 2 monitors – the monitor in the main space looked to be older, and its image was less bright and less sharp than that of the monitor to the left. The images I took (they are not screen grabs) were almost all from this monitor. Further, my camera is set to bracket, taking one shot at 1/200th of a second and the next at 1/400th of a second. This affects colour rendition. I selected the comparison images I thought were the best of each camera for consideration, regardless of my camera settings.

We start out looking at the entry cameras installed to secure face recognition of customers coming in through main doors around the mall perimeter. This is a tough application – there’s significant backlight to cope with, yet the cameras are installed perhaps 15m from the entryway, so they must balance exposure for internal and external scenes. This is difficult to do, demanding a combination of auto shutter and processing input, and we see that some cameras manage it better than others. It’s rare to see a CCTV camera that gives good face recognition in an internal space and then sees through the entryway and out into the wide world. In this case, while the cameras are specifically tasked with snaring faces, getting a view of the footpath, the street, even across the street, offers considerable value for investigations.

All cameras are focused to a point just inside the entry. Camera 1 (Samsung SNB-6004P) is best with faces and natural colour rendition; the external view is overexposed and, though the far side of the street is visible, it’s noisy. Camera 7 (Hikvision DS-2CD4025FWD) offers good face recognition, too, though colours are more saturated. It offers more balanced exposure across the street. Camera 2 (Panasonic WV-SPN631) offers good face recognition, though images are not as sharp and colours are more muted. It handles the external exposure well but its lens is generating widespread chromatic aberrations (CAs).

Camera 6 (Axis M1125) is also offering usable face recognition and a very balanced exposure – probably the best of the whole group. But lateral and longitudinal CAs from this camera’s lens are costing sharpness: chromatic aberrations are 10-12 pixels deep, which is way too high. There’s also some blur evident on movement inside the mall. Cameras 3 (Arecont AV2116DNV1), 4 (Sony SNC-EB630B) and 5 (Bosch Dinion IP 6000 Starlight HD) are exposing for the external scene and are giving face recognition outside but not inside the entryway.

With Norman in the doorway, Camera 1 is doing well. Camera 2 is doing the best in terms of face recognition and viewing across the road. Zooming in on detail of Norman’s contrast bars shows excellent sharpness and contrast separation. Camera 3 is great across the road but Norman is not rendered so well. There’s also some motion blur on pedestrians outside the door. Camera 4 is doing well with pedestrians but Norman’s face is in shadow within the entry as the camera is exposing for the external light level.

Camera 5 is doing extremely well over the road and reasonably well just inside the entry, but things are a little dark internally. The close-up of Camera 6 on Norman’s contrast bars highlights the CAs being generated by this camera’s lens. The wide view has high levels of detail externally but the internal scene under digital zoom is soft and darker. At this moment, Camera 7 is best with Norman, pedestrians and over the road, though there’s a hint of motion blur inside. Looking at camera performance outside the entryway as a priority, Camera 7 is offering the best balance and Camera 4 the best detail, colour rendition and contrast externally.

When we look at night performance, things get interesting and show the balancing act CCTV camera manufacturers, installers and end users must play with the laws of physics. Camera 1 is in colour but things are dark and there’s not a huge amount of external detail to be seen. Anything directly in the doorway will be discernible to a varying degree. Camera 2 is outstanding in low light. The image is bright, blur is low, it’s handling reflections off the glass doors well and we can see pedestrians and cars clearly on the entry side of the road, and all the way over the street.
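The balancing act is easy to put into numbers. In low light, cameras lengthen exposure, and the blur smeared across a moving subject grows in direct proportion. A minimal sketch, assuming a walking pace of about 1.4 m/s and 250 pixels per metre on the subject (roughly the density IEC 62676-4 suggests for identification); these are illustrative values, not measurements from the shootout:

```python
def motion_blur_px(speed_mps: float, exposure_s: float, px_per_m: float) -> float:
    """Approximate blur length in pixels for a subject moving at right angles
    to the camera: distance travelled during the exposure times pixel density."""
    return speed_mps * exposure_s * px_per_m


WALKING_SPEED = 1.4  # m/s, assumed typical walking pace
DENSITY = 250        # px/m on the subject, assumed for illustration

for exposure in (1 / 200, 1 / 50, 1 / 25):
    blur = motion_blur_px(WALKING_SPEED, exposure, DENSITY)
    print(f"1/{round(1 / exposure)} s -> {blur:.1f} px of blur")
```

Going from 1/200th to 1/25th of a second multiplies blur by eight, from under 2 pixels to about 14, which is enough to smear a face. Shortening the exposure instead means less light on the sensor, so the camera has to add gain (and noise) or switch to night mode.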

Camera 3 has gone to night mode and its monochrome image is reasonable with static subjects but shows too much blur for recognition of pedestrians. Camera 4 is in lovely colour and is showing great rendition, sharpness, depth of field and low levels of blur. Reflections are well handled. This image is thoroughly court admissible. Camera 5 is giving very natural colour rendition but showing CAs. There’s good depth of field and it does well with colour and static objects, but mild motion blur on walking pedestrians is costing face recognition, though full detail of colour, skin colour and so on is retained.

Camera 6 is another strong image – colour, low motion blur, strong depth of field and high tolerance for strong reflections. It’s getting face recognition without drama through the glass doors in areas of low reflection. Camera 7 is in colour but is the noisiest image of the group. Handling of reflections off the door is not quite as good as some others. It looks like we have face recognition along with full detail of clothing. Cameras 2 and 6 are the pick here.

General Mall Domes

Next, we look at general mall domes. It’s a typical tough mall application: deep views, wide angles of view, complex scenes, lots of movement, highly reflective floors and variable lighting with multiple hot spots. All these wide-angle domes have considerable barrel distortion – I’m going to guess it’s between 12 and 15 per cent for most. Spreading standard HD resolution across a wide angle of view leaves fewer pixels on each part of the scene, which makes for softer images. The laws of physics cannot be un-made.
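A quick way to quantify pixel spread: at distance d, a lens with horizontal field of view θ covers a scene width of 2·d·tan(θ/2), and dividing the horizontal pixel count by that width gives pixels per metre. The sketch below assumes a 1920-pixel-wide 1080p stream and a distortion-free (rectilinear) lens; the fields of view are illustrative, not the measured angles of the domes in this test, and real barrel distortion shifts density between the centre and edges of the frame.

```python
import math


def pixels_per_metre(h_pixels: int, hfov_deg: float, distance_m: float) -> float:
    """Horizontal pixel density at a given distance for a rectilinear lens."""
    # Width of scene covered at this distance: 2 * d * tan(HFOV / 2)
    scene_width_m = 2 * distance_m * math.tan(math.radians(hfov_deg) / 2)
    return h_pixels / scene_width_m


# Illustrative horizontal fields of view on a 1080p sensor, subject 10 m away
for hfov in (30, 60, 100):
    ppm = pixels_per_metre(1920, hfov, 10)
    print(f"{hfov:>3} deg HFOV: {ppm:.0f} px/m at 10 m")
```

At 10m, widening the view from 30 to 100 degrees drops density from roughly 360 to roughly 80 px/m, well short of the roughly 250 px/m IEC 62676-4 suggests for identification. That’s the pixel spread at work.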

Furthermore, lens quality with compact domes tends to be less exacting and there’s interference from dome bubbles. All these factors make it tough to pick the best performance. You wind up selecting on the basis of something obvious in the performance, such as higher resolution or low blur levels. Finer details are not so easy to discern, and the scenes are so complicated they always incorporate good and bad elements of performance.

Camera 1 (Panasonic WV-SFN531) is showing a cool blue colour rendition. Angle of view is wide. There are CAs costing sharpness as you digitally zoom into the scene. The floors are causing mild overexposure. There’s court-admissible face recognition out towards 15m; it’s better closer to the lens and softens as you go deeper into the scene. Camera 2 (Pelco IMP229-1IS) is giving more detail through improved contrast and lower CAs, though the image itself is similarly soft. There’s face recognition towards 15m but it’s never sharp. Handling of strong light is better. Depth of field is better. The operator digitally zooms in on a cup of coffee on a table around 10m from the lens and you know what the shape is, but there’s no specific detail.

Camera 3’s (Bosch Flexidome IP 5000 Indoor HD) image is a little soft throughout, including close up, but deeper in it shows slightly more detail than the other 2 we’ve viewed. I would put this down to a lesser lens and a higher resolution. The image is a bit unbalanced, being darker in parts and overexposed in others. The zoomed image also reveals some motion blur. Camera 4 (Arecont AV2255PMIR-SH) is reasonable in the foreground, if a little overexposed on the tiles. Face recognition is lost before 12m but depth of field is reasonably good. Blur seems low. The digital zoom shows that good colour rendition and contrast are covering for a little softness here. There’s plenty to see in the background. It’s a deep scene, remember – 35m to the back of the supermarket.

Camera 5 (Samsung SND-6083) has more barrel distortion, low blur, strong colour rendition and good contrast. It’s still soft, even close to the lens. You are not getting sharp face recognition from any of these domes even at quite close ranges. There’s some over-exposure in the image and it’s less sharp in the middle distance. The zoom shows this again – once inside the store, you’re not getting a lot of detail.

Camera 6 (Axis P3225-V) has a cool blue colour temperature. It’s handling reflection from the floor tiles the best, handling lights the best and has the highest resolution and sharpness. Barrel distortion is strong-ish at full wide. There are some CAs but detail is the highest so far. Colour balance is the best, too. The scene is rendered very evenly without much in the way of processing noise. The digital zoom confirms that detail levels are highest with this camera and that blur is well controlled.

Camera 7 (Sony SNC-EM630) is also a strong performer. Blur is low. Resolution is high. Detail is high. There’s some processing noise in the image. Barrel distortion is low. Sharpness is good close in and holds towards 20 metres. Digital zoom confirms the high levels of detail. Camera 8 (Hikvision DS-2CD4125FWD-IZ) has nice colour rendition and low blur, even at close range. Digital zoom is quite good, too, especially at close ranges, but levels of detail are not as high as Cameras 6 and 7, and I can see some digital artefacts. The camera appears to be re-rendering detail digitally.

I’d rate Camera 6 best and Camera 7 next, with not much in it between these 2 – colour temperature is the main difference, with Camera 6 tending to cool. Camera 6 performs best with Norman, confirming its superiority in resolution. Cameras 6 and 7 are the most uniform in low light, too: best is 7, then 6, with Camera 8 running third.

Micro domes

Next come micro domes, and these face a tough challenge, too. The tiny form factor means a small sensor and an economised camera system, while the operational demands (long, wide scenes, movement at right angles, strong distant backlight, low light) are diametrically opposed to those compromises in capability. You see this as soon as the group image comes up. All these cameras are soft. Blur is an issue with many, too, as is WDR performance. In the distance, there’s strong light (around 70,000 lux in my estimation) streaming through an entryway and, given their location in the corridor, all the cameras are exposing for the internal view and overexposing the external one.

Camera 1 (Bosch Microdome IP 5000 HD) looks good. Sharpness is not bad. WDR is quite good, too. Blur is mild. Colour rendition is good. Even up close you are not getting sharp images with this camera but detail is certainly court admissible. There’s plenty of barrel distortion – maybe 15 per cent but it’s less than the rest. The zoom down the hall into light shows fundamental details but the blooming of backlight prevents longer views.

Camera 2 (Panasonic WV-SFN130) also has considerable barrel distortion, which is changing the shape of the scene and subjects within it. This camera is sharper – enough for face ID, I think, certainly enough to see an untied shoelace at close range – and WDR performance is better but that’s a relative thing. The image is cool blue but there’s reasonable colour rendition. Digital zoom proves the enhanced WDR performance but also shows the shortcomings of compact camera designs.

Camera 3 (Axis M3045-V) has nice colour rendition and exhibits almost no motion blur with pedestrians moving at right angles very close to the lens. Backlight performance is extremely good, both at full optical wide angle and under digital zoom. You’re not seeing faces near the entry but you have situational awareness. Camera 4 (Samsung SNV-L6013R) is showing motion blur indicative of reduced frame rate, and pedestrians close to the lens moving at right angles have a double head. The image is softer than the other 2 and WDR is less capable than Camera 3’s, but it’s still good.

Camera 5 (Pelco IWP221-1ES) is the sharpest so far. Colour rendition is good, though WDR is no better than the others and barrel distortion is similarly high. Camera 6 (Arecont AV2555DN-S) has good colour rendition but is softer than others. WDR performance is not as strong. Camera 7 (Sony SNC-XM631) is soft but has good WDR performance and colour rendition.

Next, we do a shoelace test, which indicates whether resolution and motion blur are controlled well enough to see if laces are tied in good light. Cameras 2 and 5 manage this. With Norman in the scene, Cameras 2, 5 and 7 show themselves to have the best digital zoom, with Camera 5 best of all. In low light, Cameras 7 and 1 are best, with Camera 6 having gone to night mode but performing well. Camera 5 is next in performance.

Next, we run through digital zoom in low light conditions and at about 6m from the lens all the cameras but one, which is showing a very murky image, do quite well.

Omnidirectional cameras

The final camera group is the omnidirectional cameras, and these are installed in a row in the mall’s carpark. At night, the scene ranges from dark to very dark. During the day, it’s dark in some places and very bright in others, demanding buckets of WDR. Viewing angles are huge and depth of field is cavernous. Calling performance is tough with these cameras because of their different approaches to the challenge of offering wide views; it’s not an apples-to-apples comparison.

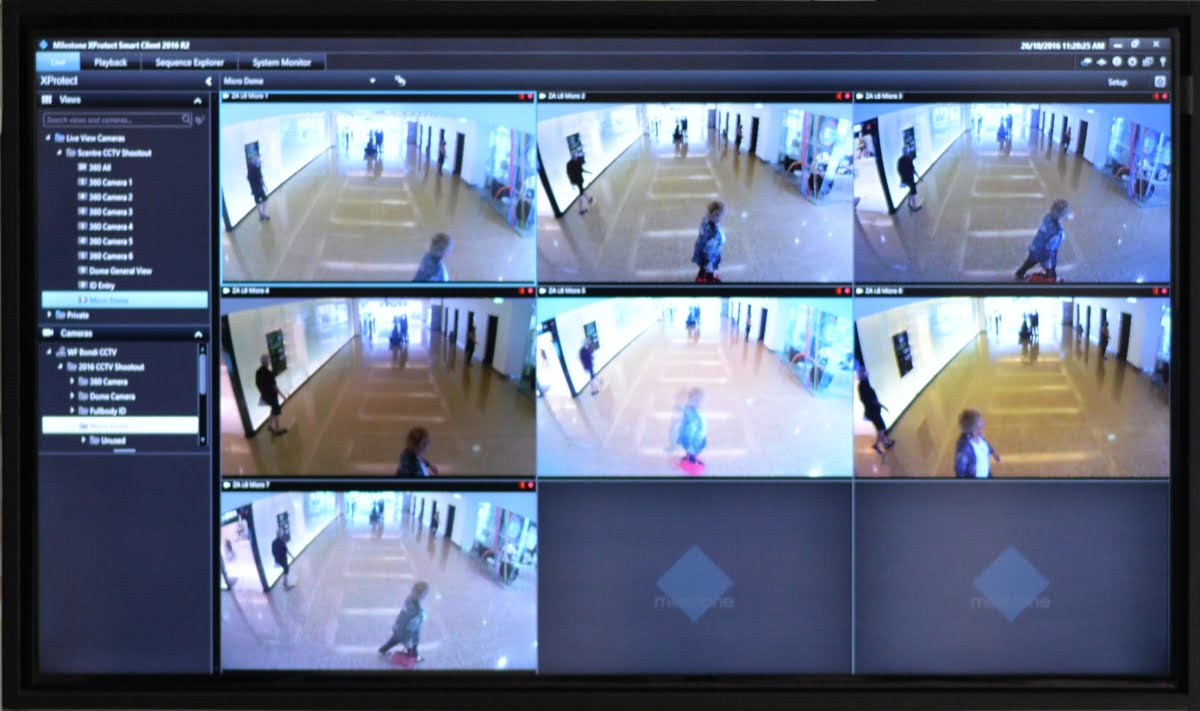

There are a couple of 360-degree hemispheric cameras and alongside them are a number of multi-head cameras. We are viewing in Milestone XProtect, with each of the multi-head camera views shown as a separate tile – this is the way Scentre Group uses such cameras. Such images can also be stitched into a single panoramic view.

Camera 1 (Panasonic WV-SFV481) is a hemispheric. We start viewing looking straight down, but the operator steers the view up towards the horizon so we can look around in 360 degrees. The horizon is a little low, which limits viewing distance deep into scenes, but the images are quite strong closer in. There’s obvious distortion on screen even though the image has been de-warped. Context is not fundamentally altered, but angles are changed. WDR performance is challenged by the dark interior and bright exterior. Resolution is reasonably good, given the monstrous 360-degree viewing angle.

Camera 2 (Pelco IMM12036-1EP) is a multi-head and performance is different. Each head of a multi-head camera has HD resolution, but the lenses on these heads are wide, so there’s some stretch and some softness. There’s some overexposure in areas of bright external light. There’s no face recognition of Norman at about 20m. Camera 3 (Arecont AV12275DN-28) is another multi-head. It shows barrel distortion, motion blur, relatively unbalanced images between light and dark, and it battles with WDR. Colour is quite heavily saturated.

Camera 4 (Samsung SNF-8010P) is a fisheye, with de-warped image streams. De-warping has an impact on images – there’s leaning-back distortion, and the heavy processing demand tends to cause some motion blur. There’s some noise in this image, too. Depth of field is reasonably good, but bright backlight seen from the dark interior generates biblical levels of blooming. We have Norman in one scene; the angle of view is simply enormous but detail of Norman is hard to come by. Again, this sort of camera works great in a store or foyer, but installing one in a huge underground carpark asks questions resolution cannot answer. I wish Mobotix had brought a camera for comparison.

Camera 5 (Axis P3707-PE) is the best we’ve seen so far. This is a multi-sensor camera. There’s barrel distortion, but colour rendition is good and lighting balance works well, too. OK, we have face recognition for the first time. A motorbike rides by and there’s good control of blooming, too. And WDR! And detail of Norman! Licence plates! Depth of field is solid. There are some CAs, but that happens.

Camera 6 (Bosch IP 7000 MP) is another panoramic. It has a quite low horizon – this camera needs to be installed high or on walls. The images are flattened and stretched by de-warping. Colour rendition is very natural. WDR performance is good. Resolution is pretty good. There’s some amplification noise. Depth of field is decent, too.

SEN’s opinion in this category is that Camera 5 is the best of the multi-head cameras and Camera 1 is the best of the panoramics.

Running through the same group at night is interesting. Performance is better without backlight in all cases. We also play with zoom a bit more, which gives a better sense of investigative capability. It’s 1.20am, so the only light is artificial – LED and fluoro, judging by the colour temperature.

Camera 1 shows the same pattern of de-warping stretch and flattening. Depth of field is OK and the image is generally soft, as most hemispherics are at wide angle. But zoom into the scene digitally and you get LPR at close and mid-ranges, and probably face recognition, too, though we did not see it as no one was around. This suggests the way we are viewing the images is affecting their appearance; the raw resolution is actually there. The further in you zoom, the noisier and more smudgy the image gets, but LPR remains and geometric perspective normalises. Looking up the driveway without 80,000 lux of daylight streaming in gives an idea of true depth of field, and it’s pretty good. You are not getting plates or faces past 15-20m but you have situational awareness at the scene edges.

Camera 2 has gone to night mode in the gloomy carpark. The image is relatively noisy for a monochrome image and there’s some blooming relating to the strip lighting, even at distances of 15-20m. You are getting plates and getting them deeper than a hemispheric and at oblique angles. View 4 of Camera 2 is the most revealing. The depth of field has really opened up. Contrast is good. You are getting car make and model at 35m and more and situational awareness is strong. We can see all the way to the carpark perimeter.

Camera 3 has stayed in colour when, given the gloom, it should have switched to night mode. I’m not sure whether the camera is set to auto or locked in day mode. Annoyingly, the image looks to have considerable detail in it, but it’s just too dark to see. Camera 4 is soft and noisy and is challenged by lights in the generally dark carpark. To handle the gloom, shutter speed has backed off, and that means bright points are prone to blooming.

Camera 5 offers a bright image with some noise. There’s characteristic distortion. Sharpness is good: licence plates are obtainable at wide and tele zoom angles. This camera has some blooming and some flare in the lens and dome bubble, but there’s good depth of field, and colour rendition is solid considering the patchy light and when compared to competitors. Night performance is not as strong as that of Camera 2, which is in night mode.

Camera 6 is another hemispheric, but for some reason SEN did not capture images of this camera’s performance characteristics aside from a single 360-degree shot at 1.24am, which shows good colour rendition and sharpness, as well as low noise and low blooming. Given this, night performance seems likely to mirror the quality of its daytime performance. There will be characteristic compression distortion in the presentation of de-warped images, but depth of field is likely to be quite strong for its type. After going through the night recordings, we also undertook a WDR test using Norman, and the best performers were Camera 1, Camera 5 and Camera 6.

Conclusions

The conclusion I drew after the event was that in the real world, with constraints on price and bandwidth, things are challenging for manufacturers, suppliers, integrators and end users. Parameters like cost butt heads with performance and certain applications have demands so specific it’s challenging for cameras to offer the operational flexibility they must through a 24-hour cycle. Regardless, it was possible to discern which cameras did better, although in a couple of cases there were setup issues that certainly saw otherwise capable cameras hamstrung in the conditions they faced. That’s the challenge of camera shootouts.

According to Pollak, part of the reason Scentre Group undertakes its National CCTV Camera Shootout is that as an organisation Scentre Group is committed to “bringing the bar up across the industry” when it comes to technical excellence.

“The more the end users, consultants, integrators and vendors take into account operational performance vectors, the better it works out for everyone,” Pollak says. “In closing, we’d like to thank Simon Syamando from JSC for putting in many nights of work getting the system set up – he did a great job with the installation. Secondly, Scentre Group extends a big thank you to all the vendors and integrators involved in building this test solution. We understand it takes a lot of time to get a very large shootout like this together on a working site and we are grateful for all your efforts.” ♦

By John Adams