Scentre Group’s CCTV Shootout held at Westfield Mall in Bondi Junction covered ID, mall and low profile cameras. Among other things, the shootout showed the vital importance of avoiding motion blur when you want face recognition.

Scentre Group’s CCTV shootout was great fun and really highlighted the challenges of quickly assessing the best camera in a large lineup. The test covers three key locations at Westfield Bondi Junction that typify challenges in many Scentre Group (Westfield) malls. From an individual’s perspective, the first step is to define terms: what does 'best' mean in relation to meeting a specification?

Is it best technical performance or best operational delivery? Is it best colour rendition, best contrast, best depth of field, or is it best face recognition, thanks to shutter settings that keep motion blur as low as possible while still allowing the camera to handle areas of low light in a scene?

From the position of an observer, manufacturer or integrator, this idea of performance can differ from what 'best' means to an end user. You really need to be across operational requirements in the way Scentre Group was. Westfield records 500 million customer visits to its stores nationally each year, which is a big number. Scentre Group’s CCTV needs are well defined, and participants were handed a comprehensive specification prior to the shootout.

Vendors, visitors and Scentre Group staff

For Scentre Group’s CCTV Shootout, a large group of manufacturers and distributors has been invited to show their cameras, and vendors have been given plenty of time to set their cameras up so that each has an equal opportunity to compete. A cross-section of some of the best brands is represented here, including Axis, Sony, Bosch, Canon, Panasonic, Samsung Techwin, Hikvision and Arecont Vision.

Once we’ve all met up onsite, Yigal Shirin, senior risk & security manager at Westfield Bondi, walks us through the mall to look at the various camera locations. We start with the entry cameras, then see a vertical mounting bracket onto which multiple large dome cameras have been installed; the way it’s all been done is fascinating to see.

“What we have here is our common mall camera and we chose this area because it’s high traffic, there’s different light levels as well as a mirror, the black underside of the escalators and an adjacent advertising screen that exposes the cameras to different reflective surfaces, making them work a bit more,” explains Shirin. “Everything is apples to apples, same resolution, same frame rate.”

Next, we look at compact cameras installed on a ceiling. The small ceiling mount cameras are being tested to see if they meet a number of qualities Scentre Group favours, including the ability to handle low ceiling heights, to give good colour rendition and face recognition and to have low motion blur.

Not the easiest subject to focus on…

It’s a fascinating setup and all the folks in attendance are enjoying themselves. It must be nerve-wracking for the manufacturers but everyone remains in good spirits throughout the process. As someone observes, you don’t get many opportunities like this, so there’s plenty of excitement on all sides. People love an objective test.

We all troop into the demo room to a welcome from John Yates, head of security for Westfield, who thanks camera vendors for their contribution to the Shootout process and explains that CCTV is one of the most important aspects in terms of the protection of the company’s centres.

“It’s hugely important we get the right quality and specification,” Yates explains. “We also want the best price, we want signals to be encrypted, we want our system to be future-proof, we want it to be auditable. What we are doing here today is giving everyone the best chance to present their cameras in the best light so we get the best chance to select the best cameras for our centres.”

Scentre Group manager of corporate security, Simon Pollak

Next, Scentre Group manager of corporate security Simon Pollak explains the camera specifications and tells us these are updated every six months to ensure the Group’s changing needs are constantly being met. According to Pollak, the test is designed to assess three classes of camera considered vitally important by Scentre Group’s security team: ID cameras for face recognition of people coming into centres, general mall cameras on the retail floor, and micro domes for areas with lower ceilings.

"Our judging panel was specifically selected to include representatives from a variety of key user groups including security, risk management, design and construction, and technology, with each user rating the camera images on the criteria that were most important to them as users of the system," Pollak explains. "In this way, we were able to achieve a balanced representation of the operational requirements of our CCTV systems."

“As far as the way we will be judging the cameras, we put together a scoring criteria which takes into account price, image quality of primary and secondary stream and the potential of camera. We’ve applied restrictions in terms of frame rate and bandwidth – this relates to our storage capacity, as well as a desire to maintain a level playing field. In the first test cameras are limited to a 3Mbps stream but some have the ability to deliver improved performance if we have increased storage or our needs change. We also look at physical form factors and aesthetics.”
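
Pollak’s point that the frame rate and bandwidth limits relate to storage capacity is easy to quantify. The sketch below is our own illustration, not Scentre Group’s method: it assumes a constant bit rate (real variable bit rate streams fluctuate with scene activity) and decimal units, and the 30-day retention period is purely hypothetical.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def storage_gb_per_day(bitrate_mbps: float) -> float:
    """Decimal gigabytes recorded per camera per day at a constant bit rate."""
    bits_per_day = bitrate_mbps * 1_000_000 * SECONDS_PER_DAY
    return bits_per_day / 8 / 1_000_000_000  # bits -> bytes -> GB


def storage_gb(bitrate_mbps: float, retention_days: int) -> float:
    """Storage for one camera over a retention period (constant bit rate assumed)."""
    return storage_gb_per_day(bitrate_mbps) * retention_days


# 1.5Mbps and 3Mbps are stream caps from the article; 30 days is hypothetical.
for rate in (1.5, 3.0):
    print(f"{rate} Mbps: {storage_gb_per_day(rate):.1f} GB/day, "
          f"{storage_gb(rate, 30):.0f} GB over 30 days")
```

On these assumptions a 3Mbps stream writes about 32.4GB per camera per day, twice the 16.2GB of a 1.5Mbps stream, so raising the cap is a storage decision as much as an image-quality one.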

Scentre Group has prepared a copy of the scoring criteria so anyone who wants to can take notes on individual camera performance. Almost everyone in the room takes a form, but the forms are hard to fill out. The key is to decide on criteria. What’s most valuable to the viewer? Contrast? Colour? Resistance to motion blur? Resistance to backlight? Or a combination of all of these?

As the test progresses many people fall into small groups of 2 or 3 and intuitively assess the camera performance – comparing their opinions with their neighbours. It’s not utterly objective but being objective is hard to do. Some things are easy to assess – colour rendition, saturation, depth of field, detail-enhancing contrast, resistance to motion blur. But all these things and others combine in different ways in a real world application like this one.

Standing in the demo room, listening to and taking part in the chat around me, it quickly becomes clear there are so many variables that time is needed to be certain operational requirements are met. You need to undertake a process of elimination, choosing the two or three best cameras and focusing very closely on those. Most groups around me are instinctively undertaking that initial process of picking out the top performers.

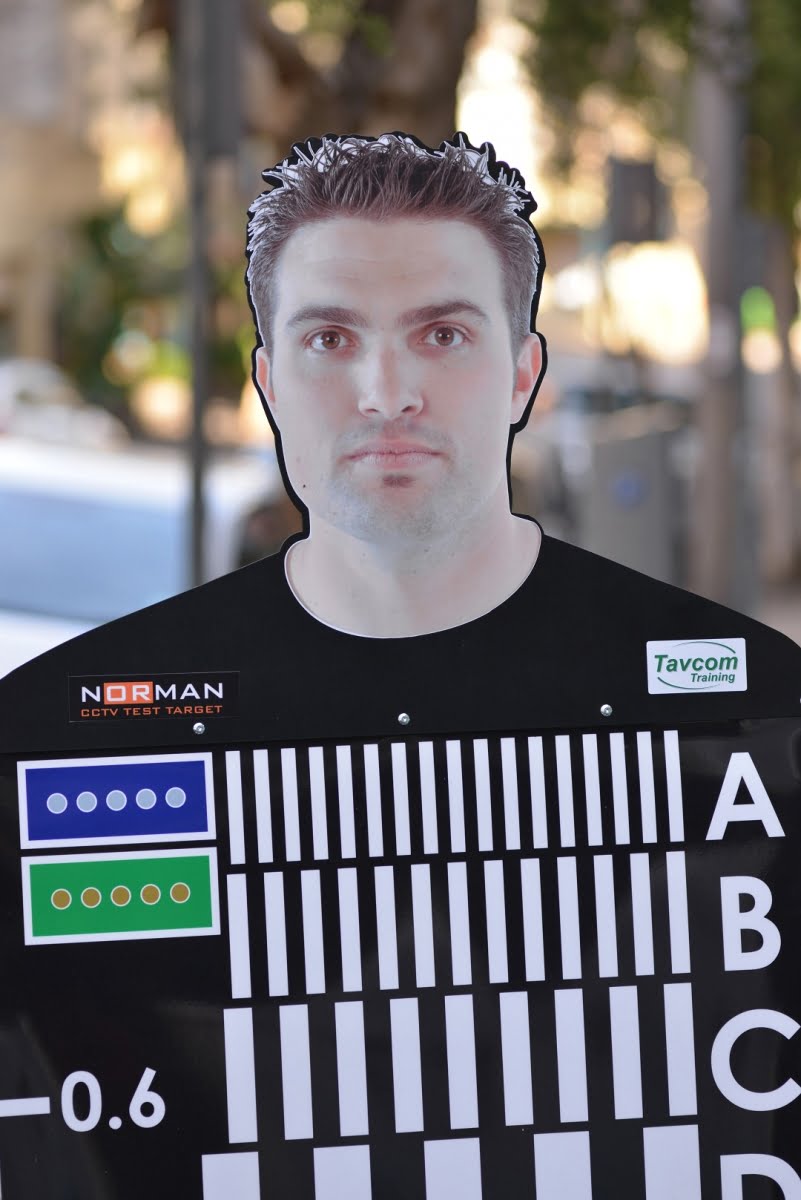

Norman – perennially disappointed he can't see the test monitor

We start with the general mall cameras. While we’ve been talking, SEN’s Tavcom test target Norman has been wheeled into position in front of the first camera mount. We’re comparing variations in colour rendition, contrast between the white and black bars, and the ability to recognise Norman’s face. Deeper into the scene is a large red Coles sign, and we’re also looking at colours and feedback from digital display signage. People are moving through the area, so we’re also concerned with how well the cameras handle face recognition, motion blur and colour rendition.

According to Pollak, entry cameras were set to 1080p resolution, 10 images per second and a 3Mbps bit rate, while mall domes and compact domes were set to 720p, 8 images per second and a 1.5Mbps bit rate. Scentre Group nominated the codec but not the shutter speed, so movement in the scene will reveal which vendors chose which shutter settings. Generally speaking, the price of the cameras in each part of the test is comparable.
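
One rough way to compare these stream settings is the average number of encoded bits available per pixel in each image. This is a rule-of-thumb sketch of our own, not one of Scentre Group’s judging criteria; it assumes the standard 1920×1080 and 1280×720 frame sizes and ignores differences between codecs and scene complexity. The `StreamSpec` class is hypothetical.

```python
from dataclasses import dataclass


@dataclass
class StreamSpec:
    name: str
    width: int          # pixels
    height: int         # pixels
    fps: float          # images per second
    bitrate_mbps: float

    def bits_per_pixel(self) -> float:
        """Average encoded bits available per pixel per image."""
        pixels_per_second = self.width * self.height * self.fps
        return self.bitrate_mbps * 1_000_000 / pixels_per_second


specs = [
    StreamSpec("entry (1080p)", 1920, 1080, 10, 3.0),
    StreamSpec("mall/compact dome (720p)", 1280, 720, 8, 1.5),
]
for s in specs:
    print(f"{s.name}: {s.bits_per_pixel():.3f} bits per pixel")
```

By this measure the entry cameras get roughly 0.145 bits per pixel and the 720p domes roughly 0.203, so the higher-resolution entry streams are actually the more tightly squeezed. A deep, busy scene with lots of movement spends that budget faster, which is one reason clamped bit rates change how the images look.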

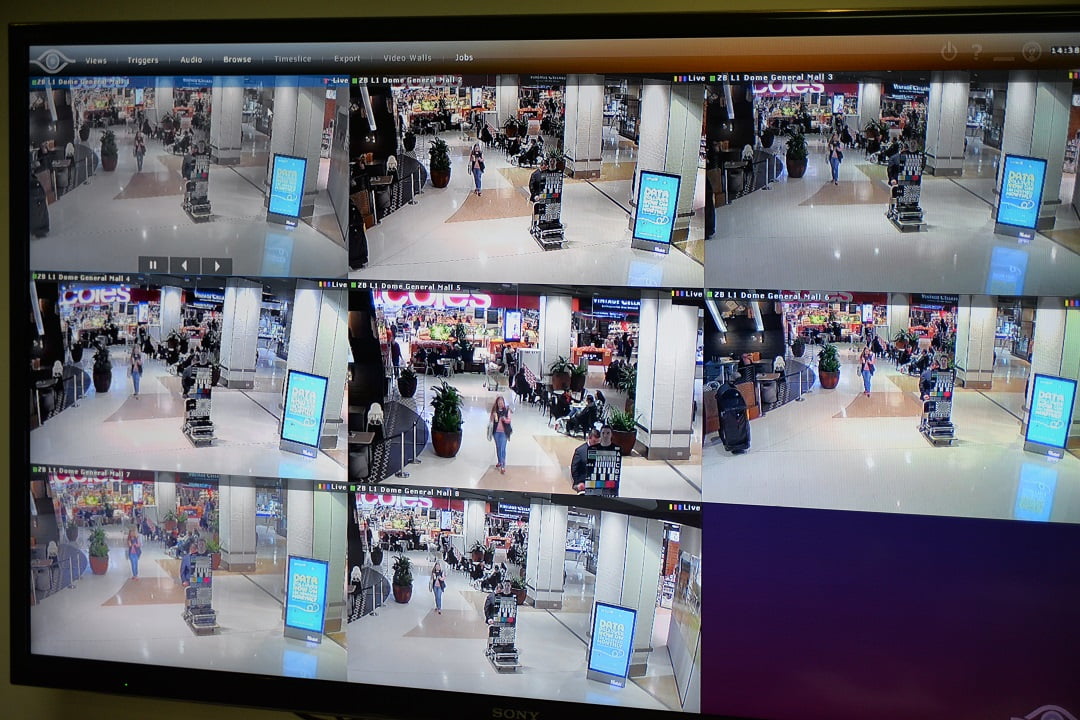

General mall domes

We start off looking at eight cameras in a split screen, and while there are some variations to be discerned, there’s not much to go on. There are a few variations in focal length, but generally the cameras all seem to be doing quite well in this scene. When we run through the cameras in full frame (we can't show these, as customers’ faces are identifiable), the differences become much clearer. Some of the images look quite different. Some are strong on colour but low on detail, while others have loads of detail but too much noise for the sort of scenes we are viewing. There are setup issues, too. Some have WDR switched on to handle bright points of light, but this is introducing noise artefacts. Some are out of focus.

Pollak tells us that the cameras were all set up within 48 hours and that vendors could come in to tweak and adjust colour and backlight settings. Frankly, shopping centres are a tough gig, I think, as I watch the test unfold. The scenes are deep, there’s loads of movement and colour variation, and with bit rates clamped, the images look rather different from how they might otherwise.

Next, we run through playback on the same cameras. Judging the cameras as a group is hard, and from listening to everyone else in the room, many others are finding it challenging too. It’s easier once we go through the cameras in succession. Despite the flicker of the 60Hz Axis P3215-VE as it comes up on replay, I can’t help saying that it’s a terrific image, and there’s a simultaneous chorus from others praising its qualities. The depth of field is great on the P3215-VE and the general look of the image is strong.

Also strong in this group are Samsung, Panasonic, Hikvision and Bosch; of the Bosch, someone remarks aloud that it has a lovely balance of features, which is important in this sort of application. The Samsung SND-5083P, too, comes out well. It has a particular strength in face recognition and shows very low motion blur.

Once we’re done with these views and Norman is wheeled to the next camera view, we get stuck into the second category – entry ID. According to Shirin, this is the most important category for the Scentre Group team.

“On a day-to-day basis we want to identify people coming into the store with crisp, clear images,” he explains. “The problem we have is with backlight – it’s bad now but in summer at 1pm it would be far worse, so this camera range is vital to us.”

These are high-end full body cameras. We kick off with the split screen, and the first camera is slightly out of focus, which is a shame. Looking across them, there’s considerable difference between the images. Some have different focal lengths; some show the blooming of overexposure outside but are getting good faces in the doorway; others do well in the doorway and have good depth of field; and some are just OK in the doorway but manage to see all the way across the road in killer backlight. In this scene motion blur still matters even though customers are walking towards the cameras, and not every camera is getting that part right.

Entry ID cameras

The room breaks into a buzz as we run through this group one camera at a time. Many like camera 4, the Axis P1365. Talking to the people around me, we agree camera 7 is also very strong, with good colour and texture in backlight; this turns out to be the Hikvision Lightfighter. The Bosch camera is praised in this application and the Panasonic WV-SPN531 gets positive comments, too. Then camera 8 gets a good rap: it’s the Samsung SNB-6004P.

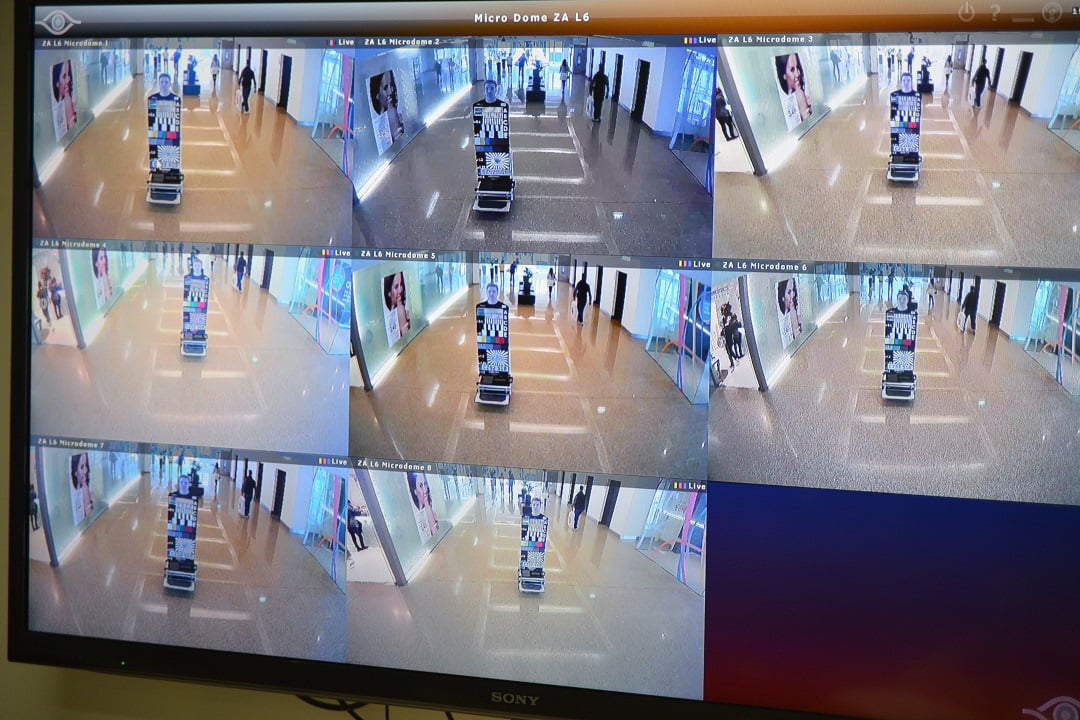

The last category is the micro dome camera, which Pollak points out is the category in which aesthetics plays the biggest role. These cameras are all 720p and 6fps, with bandwidth capped at 1.5Mbps. In this application the cameras look across an internal pedestrian intersection with low ceiling heights. There’s a doorway in the distance and a long walkway outside the door. Looking at the split screen, you can see some cameras are able to see outside the door and a long way down the walk to the carpark. Others do this less well but perform much better at closer ranges, which, as someone points out, is the key point of the specification.

In some ways, this is the most challenging application. The cameras are installed close to customers, and much of the traffic crosses the field of view at right angles. This is a severe test of motion blur, and some cameras that otherwise perform brilliantly here struggle to handle it, which means they often fail to deliver face identification.
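
Why crossing traffic is such a severe test can be shown with simple arithmetic: the smear is roughly the distance a person moves while the shutter is open, converted into pixels at the subject’s distance. The walking speed, scene width and shutter speeds below are illustrative assumptions, not measurements from the shootout, and `motion_blur_px` is a hypothetical helper.

```python
def motion_blur_px(speed_m_s: float, exposure_s: float,
                   image_width_px: int, scene_width_m: float) -> float:
    """Approximate smear, in pixels, of a subject crossing the frame.

    Distance moved during the exposure, converted to pixels using the
    horizontal pixel density at the subject's distance. Assumes motion
    at right angles to the camera's line of sight (the worst case).
    """
    pixels_per_metre = image_width_px / scene_width_m
    return speed_m_s * exposure_s * pixels_per_metre


# Hypothetical values: a 1.4 m/s walker crossing a 6 m wide field of view
# captured on a 1280-pixel-wide (720p) image.
for shutter in (1 / 30, 1 / 100, 1 / 250):
    blur = motion_blur_px(1.4, shutter, 1280, 6.0)
    print(f"1/{round(1 / shutter)} s: {blur:.1f} px of blur")
```

On these assumptions a 1/30s shutter smears a face across roughly 10 pixels, while 1/250s cuts that to about 1 pixel. The trade-off is less light per image, which is why shutter choice and low-light handling pull against each other.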

Colour rendition and colour balance in the presence of highlights are another point of difference for some cameras. There are also cameras with WDR activated to manage bright points of light from the entrance and the reflective tile floor, and this is affecting aspects of their image quality. Generally speaking, all the cameras in this scene, which is deceptively deep, are making a reasonably good job of it.

Low profile cameras

My immediate call is 5, 6 and 7, though at this point no one has seen the setup sheet and we have no idea which tile is which camera. As it turns out, 5 is the Hikvision 2532F-IWS, 6 is the Sony XM631 and 7 is the Bosch Flexidome 500E. As we go through the full frame images, some cameras show their strengths while others show quirks, such as stronger than usual barrel distortion. It’s a tough challenge. Some cameras are using shutter speeds that are too slow, leading to blooming from overexposure and accompanying motion blur.

As we go through the full screen images, I admire camera 3, the Canon VB-S805D. Camera 4, the Samsung SNV-6013P, is doing very well for face recognition and also handles motion blur very well. Camera 5 has a great image that’s very well balanced, but there’s some motion blur. At the end of this segment I rate camera 4 the highest, but others around me have different ideas, saying its colour isn’t as true as some others. When we go through the recordings I again like camera 3, and there’s broad agreement that camera 4 is a good balance. Camera 5 is considered to have good colour with a lack of motion blur. Meanwhile, camera 6 has great depth of field but a little motion blur, camera 7 has very natural colour but some blur, and we agree camera 8, the Panasonic, is good, too.

In the real world you really notice things like motion blur, and when you focus on that part of the specification, things that might appeal during a static camera test fade into the background. As we finish up, the room is a hubbub of talk about which camera did best and how valuable this sort of objective test can be.

I don’t think anyone came away from this quick test – Scentre Group subsequently undertook days of evaluation – with a sense of exactly which camera was best in each application. But we were all thinking about the nature of camera performance and relating it to operational demands. This was a fascinating event – a credit to Scentre Group staff, as well as the vendors and technicians involved.♦

By John Adams